Ensuring "Apples-to-Apples" Forecast Comparison

Author: Eric Gilgenbach

Date Created:

Overview

This article highlights the importance of synchronizing the comparison periods and dimensionality of your SensibleAI Forecast results and your benchmark forecast, so that any accuracy comparison between them is “apples-to-apples”.

Example Walkthrough

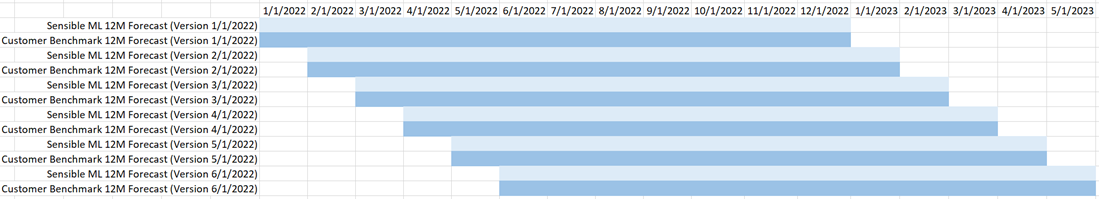

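It would be incorrect, for example, to stack the last 8 months of a 12-month SensibleAI Forecast forecast created on 1/1/2022 against the first 8 months of a 12-month customer benchmark forecast that began on 5/1/2022. The two forecasts start four months apart, so the benchmark benefits from more recent information and the values being compared are not predicted at the same lead time.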
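The mismatch in this example can be made concrete with a minimal sketch. It assumes that both forecasts are monthly and that the 1/1/2022 forecast covers January through December 2022; the helper `monthly_forecast` and the variable names are hypothetical and not part of SensibleAI Forecast.

```python
from datetime import date

def monthly_forecast(start: date, horizon: int) -> dict[date, int]:
    """Map each target month to its lead time (1 = first forecasted month).

    Hypothetical helper for illustration only.
    """
    leads = {}
    for lead in range(1, horizon + 1):
        idx = start.month - 1 + (lead - 1)
        target = date(start.year + idx // 12, idx % 12 + 1, 1)
        leads[target] = lead
    return leads

# Assumption: the forecast created on 1/1/2022 covers Jan 2022 .. Dec 2022.
sensible_ai = monthly_forecast(date(2022, 1, 1), 12)
# The benchmark begins on 5/1/2022 and covers May 2022 .. Apr 2023.
benchmark = monthly_forecast(date(2022, 5, 1), 12)

# Months that appear in both forecasts.
shared_months = sorted(set(sensible_ai) & set(benchmark))

# For each shared month, compare how far ahead each forecast was predicting.
lead_pairs = [(m, sensible_ai[m], benchmark[m]) for m in shared_months]
mismatched = [pair for pair in lead_pairs if pair[1] != pair[2]]

for month, ai_lead, bench_lead in lead_pairs:
    print(month.strftime("%Y-%m"), "SensibleAI lead:", ai_lead, "benchmark lead:", bench_lead)
```

Under these assumptions the two forecasts share eight target months, but every one of them is compared at a different lead time, which is why stacking them does not give a fair accuracy comparison.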

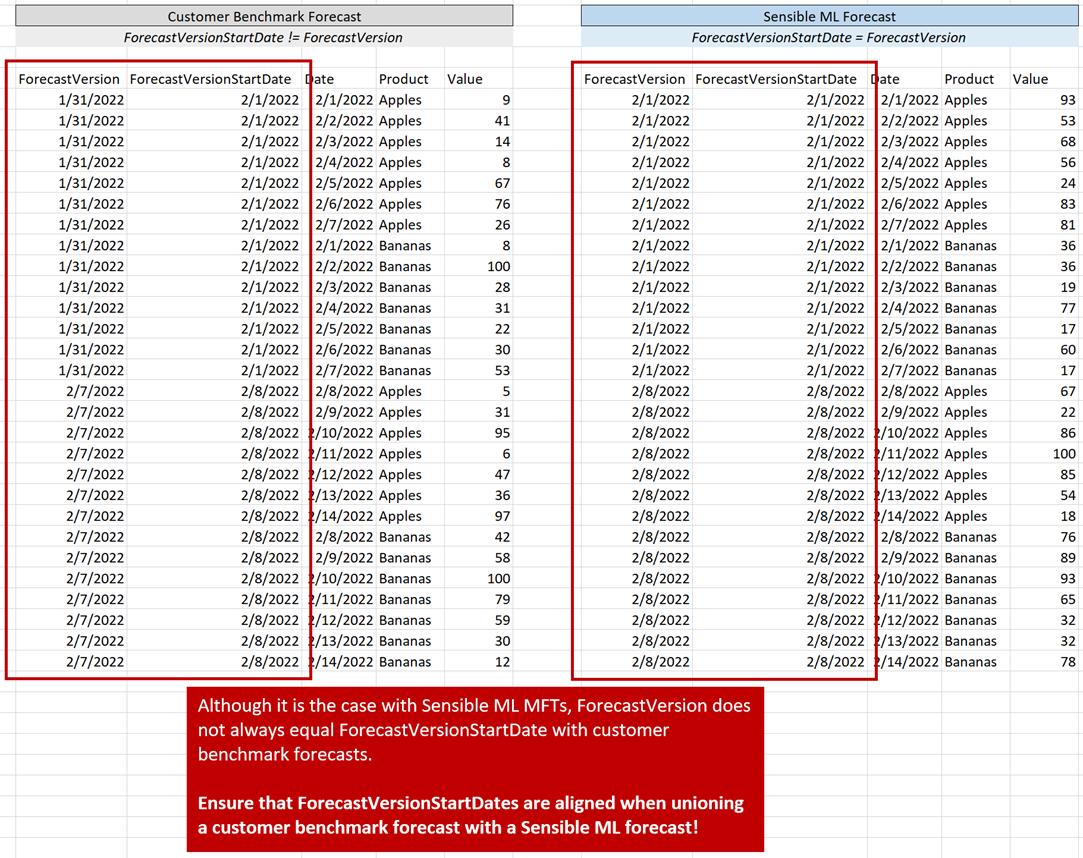

At a more granular level, it is important to ensure that ForecastStartDate values line up when unioning a benchmark forecast to a ready-for-ingestion FVA Table.

For example, a 30-day customer benchmark forecast with values between 2/1/2022 and 3/2/2022 might have a ForecastStartDate of 1/31/2022, meaning that it was run on 1/31/2022 and its first forecasted date is 2/1/2022. SensibleAI Forecast, for that same forecast window, would have a ForecastStartDate of 2/1/2022, because the ForecastStartDate of a SensibleAI Forecast forecast is always the first date of that forecast.

Thus, the 1/31/2022 customer benchmark forecast should be lined up against the 2/1/2022 SensibleAI Forecast forecast. This can be tricky, so a power user should verify that ForecastStartDates are aligned correctly across the different forecasts before comparing them.
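One way to handle the benchmark in this example is to re-key each benchmark run to its first forecasted date before the union. The sketch below is illustrative only: the row layout and the `Source` and `Date` column names are assumptions rather than the actual FVA Table schema, and `daily_rows` and `align_to_first_date` are hypothetical helpers.

```python
from datetime import date, timedelta

def daily_rows(source, forecast_start, first_date, days):
    """Build one row per forecasted day (hypothetical layout, not the FVA schema)."""
    return [
        {"Source": source,
         "ForecastStartDate": forecast_start,
         "Date": first_date + timedelta(days=i)}
        for i in range(days)
    ]

# Benchmark: run on 1/31/2022, values for 2/1/2022 through 3/2/2022.
benchmark = daily_rows("Benchmark", date(2022, 1, 31), date(2022, 2, 1), 30)
# SensibleAI Forecast: ForecastStartDate is the first forecasted date.
sensible_ai = daily_rows("SensibleAI Forecast", date(2022, 2, 1), date(2022, 2, 1), 30)

def align_to_first_date(rows):
    """Re-key each forecast run so ForecastStartDate equals its first forecasted date."""
    first_date = {}
    for row in rows:
        key = row["ForecastStartDate"]
        first_date[key] = min(first_date.get(key, row["Date"]), row["Date"])
    return [{**row, "ForecastStartDate": first_date[row["ForecastStartDate"]]}
            for row in rows]

# Align the benchmark to the SensibleAI convention, then union the two sets of rows.
unioned = align_to_first_date(benchmark) + sensible_ai
start_dates = {row["ForecastStartDate"] for row in unioned}
print(start_dates)
```

After the re-keying, both forecasts carry the same ForecastStartDate for the same window, so they can be matched row for row. This only works if each benchmark run has a distinct original ForecastStartDate; a benchmark that follows a different convention would need its own rule.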

The image below demonstrates this concept:

Summary

The reliability of any comparison between SensibleAI Forecast and a customer benchmark forecast depends on properly synchronizing forecast periods and dimensions. Aligning ForecastStartDates and carefully matching forecast windows is critical to avoid misinterpreting forecast performance. Power users play an essential role in verifying and maintaining this alignment, which is what makes a true "apples-to-apples" comparison possible.