SensibleAI Forecast: Data Validation & Exploration

Before beginning Rapid Project Experimentation (RPE), a SensibleAI Forecast implementor must verify that data quality is sufficient by confirming that the criteria below are met. These checks can be performed with SQL queries in Query Composer within AI Data Manipulator (DMA).

Data Validation Checks

No Duplicate Values

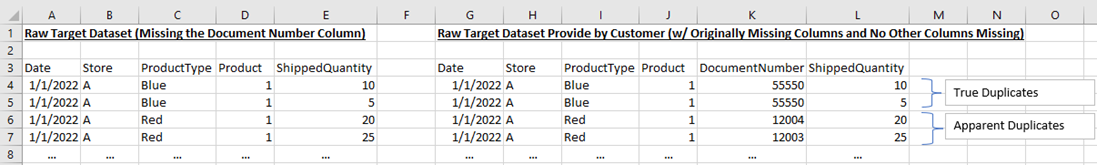

In the final target dataset, aggregated by target dimensions and the desired frequency, there can be no duplicate intersections (Target Dims ~ Location ~ Date). However, an implementor should also watch for duplicates in the raw, unaggregated source target dataset. If a raw dataset that contains true duplicates is aggregated, the duplicated values are counted more than once and the final target dataset will be incorrect. The example above illustrates the difference between “true duplicates” and “apparent duplicates”: an apparent duplicate becomes unique once columns omitted from the originally provided raw source target dataset are included.

In this example, a customer provides a SensibleAI Forecast implementor with a dataset that is missing a column that makes each observation unique. The intersection A ~ Red ~ 1 on Jan 1, 2022 is an apparent duplicate: once the extra column that was not originally provided is incorporated, each observation is unique. In contrast, the intersection A ~ Blue ~ 1 on Jan 1, 2022 still appears to contain a true duplicate even with all columns included, which signals a data quality issue. The takeaway is that an implementor must not create an inaccurate final target dataset by aggregating a raw dataset that contains true duplicates. As a best practice, the implementor should ask the customer whether any columns not being provided would add uniqueness to each observation.
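A minimal sketch of both duplicate checks, assuming the raw data can be queried with SQL. It uses Python's built-in `sqlite3` module so it runs standalone; the table `raw_target`, its columns (including the hypothetical `order_id`, standing in for the column the customer did not originally provide), and the sample rows are illustrative assumptions loosely mirroring the intersections described above. The SQL dialect available in Query Composer may differ from SQLite. Grouping on the intersection key alone surfaces both kinds of duplicates, while grouping on every column isolates true duplicates.

```python
import sqlite3

# Hypothetical raw source rows: (product, color, location, sale_date, order_id, amount).
# order_id stands in for the column the customer did not originally provide.
rows = [
    ("A", "Red", 1, "2022-01-01", "ORD-1", 10.0),
    ("A", "Red", 1, "2022-01-01", "ORD-2", 5.0),   # apparent duplicate: order_id differs
    ("A", "Blue", 1, "2022-01-01", "ORD-3", 7.0),
    ("A", "Blue", 1, "2022-01-01", "ORD-3", 7.0),  # true duplicate: identical in every column
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_target ("
    "product TEXT, color TEXT, location INTEGER, sale_date TEXT, order_id TEXT, amount REAL)"
)
conn.executemany("INSERT INTO raw_target VALUES (?, ?, ?, ?, ?, ?)", rows)

# Repeated intersections (Target Dims ~ Location ~ Date): true and apparent duplicates.
key_dupes = conn.execute(
    """
    SELECT product, color, location, sale_date, COUNT(*) AS n
    FROM raw_target
    GROUP BY product, color, location, sale_date
    HAVING COUNT(*) > 1
    ORDER BY product, color, location, sale_date
    """
).fetchall()

# Rows repeated across every column: true duplicates only.
true_dupes = conn.execute(
    """
    SELECT product, color, location, sale_date, order_id, amount, COUNT(*) AS n
    FROM raw_target
    GROUP BY product, color, location, sale_date, order_id, amount
    HAVING COUNT(*) > 1
    """
).fetchall()

print(key_dupes)   # [('A', 'Blue', 1, '2022-01-01', 2), ('A', 'Red', 1, '2022-01-01', 2)]
print(true_dupes)  # [('A', 'Blue', 1, '2022-01-01', 'ORD-3', 7.0, 2)]
```

Both A ~ Red ~ 1 and A ~ Blue ~ 1 appear in the first result, but only A ~ Blue ~ 1 appears in the second, so it is the intersection to raise with the customer before any aggregation.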

Expected Target Count

Verify that the number of targets (unique intersections of target dimensions and location) in the final dataset is in line with the customer's expectation.
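A sketch of the count, again using SQLite through Python's `sqlite3` module as a stand-in for Query Composer. The `final_target` table, its columns, the sample rows, and `EXPECTED_TARGETS` are hypothetical, and the query assumes a target is one unique Target Dims ~ Location combination, so the date is excluded from the count.

```python
import sqlite3

# Hypothetical final (aggregated) target table: one row per product ~ location ~ week.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE final_target (product TEXT, location INTEGER, week_start TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO final_target VALUES (?, ?, ?, ?)",
    [
        ("A", 1, "2022-01-03", 10.0),
        ("A", 1, "2022-01-10", 12.0),
        ("A", 2, "2022-01-03", 3.0),
        ("B", 1, "2022-01-03", 8.0),
    ],
)

# Assumption: a target is a unique Target Dims ~ Location combination (date excluded).
(target_count,) = conn.execute(
    "SELECT COUNT(*) FROM (SELECT DISTINCT product, location FROM final_target)"
).fetchone()

EXPECTED_TARGETS = 3  # hypothetical figure confirmed with the customer
if target_count != EXPECTED_TARGETS:
    print(f"Found {target_count} targets, expected {EXPECTED_TARGETS}: investigate before RPE")
else:
    print(f"Target count OK: {target_count}")
```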

No False Data Points

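If anomalies are identified, confirm that they are true data points (real events, such as a one-time bulk order) rather than errors introduced during data entry or extraction.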
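The source does not prescribe a method for identifying anomalies. One simple option, swapped in here purely for illustration, is Tukey's fences: flag values below `Q1 - k*IQR` or above `Q3 + k*IQR` within a single target's history. The function name `flag_for_review`, the default `k = 1.5`, and the sample series are assumptions. Flagged points are candidates to confirm with the customer, not values to delete automatically.

```python
from statistics import quantiles

def flag_for_review(series: dict[str, float], k: float = 1.5) -> list[str]:
    """Return the dates whose values fall outside Tukey's fences for one target."""
    q1, _, q3 = quantiles(list(series.values()), n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [day for day, value in series.items() if value < low or value > high]

# Hypothetical weekly history for a single target.
history = {
    "2022-01-03": 10, "2022-01-10": 12, "2022-01-17": 11, "2022-01-24": 13,
    "2022-01-31": 12, "2022-02-07": 95, "2022-02-14": 11, "2022-02-21": 10,
}
flagged = flag_for_review(history)
print(flagged)  # ['2022-02-07']
```

Quartile-based fences are less influenced by the very spikes they are meant to catch than a mean-and-standard-deviation rule, which is why they are used in this sketch.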

Sufficient Data Density

A minimum data density threshold percentage can be difficult to determine: some sparse datasets are highly forecastable with SensibleAI Forecast, while some dense datasets are not. In most cases, however, a denser dataset will be more forecastable. If a given target aggregation and data frequency returns a sparse dataset, try aggregating to a higher level of the target dimensions or resampling to a lower data frequency (e.g., from daily to weekly or from weekly to monthly).
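A precise definition of data density is not given here, so the sketch below assumes density means the share of periods in a target's date range that have a non-zero value. It computes that share for a hypothetical daily series, then resamples the series to weekly totals (weeks starting on Monday, also an assumption) to show how a lower frequency can raise density. The helper names `density` and `to_weekly` are illustrative.

```python
from collections import defaultdict
from datetime import date, timedelta

def density(values: dict[date, float], start: date, end: date, step: timedelta) -> float:
    """Share of periods from start to end (inclusive) that have a non-zero value."""
    periods = non_zero = 0
    current = start
    while current <= end:
        periods += 1
        if values.get(current, 0) != 0:
            non_zero += 1
        current += step
    return non_zero / periods

def to_weekly(daily: dict[date, float]) -> dict[date, float]:
    """Sum daily values into weeks keyed by their Monday start date."""
    weekly: dict[date, float] = defaultdict(float)
    for day, value in daily.items():
        weekly[day - timedelta(days=day.weekday())] += value
    return dict(weekly)

# Hypothetical sparse daily sales for one target, Mon 2022-01-03 through Sun 2022-01-16.
daily = {date(2022, 1, 4): 5, date(2022, 1, 12): 2, date(2022, 1, 13): 1}
daily_density = density(daily, date(2022, 1, 3), date(2022, 1, 16), timedelta(days=1))
weekly = to_weekly(daily)
weekly_density = density(weekly, date(2022, 1, 3), date(2022, 1, 10), timedelta(weeks=1))
print(f"daily: {daily_density:.0%}, weekly: {weekly_density:.0%}")  # daily: 21%, weekly: 100%
```

Under the assumed definition, the same target moves from about 21% dense at a daily frequency to 100% at a weekly frequency.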

Target Dimension Value Consistency

Ensure that target dimension values are clean and consistent. For example, avoid ‘Appetizers’ in one observation but ‘Appetizer’ in another, or ‘000010054’ in one observation but ‘10054’ in another.
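One quick way to surface inconsistent values is to group raw values by a normalized key and report any key that maps to more than one spelling. The normalization rules below (trimming, case-folding, stripping leading zeros from numeric codes, and dropping a trailing "s") are a deliberately crude, hypothetical heuristic for generating candidates to review with the customer. They are not a cleansing rule to apply blindly; leading zeros, for instance, may be meaningful in some source systems.

```python
from collections import defaultdict

def normalize(value: str) -> str:
    """Crude comparison key, used only to surface candidate inconsistencies."""
    key = value.strip().casefold()
    if key.isdigit():
        key = key.lstrip("0") or "0"  # '000010054' and '10054' share a key
    elif key.endswith("s"):
        key = key[:-1]                # naive plural handling: 'appetizers' -> 'appetizer'
    return key

def inconsistent_values(values: list[str]) -> dict[str, set[str]]:
    """Map each normalized key to its raw variants, keeping only keys with 2+ variants."""
    groups: dict[str, set[str]] = defaultdict(set)
    for value in values:
        groups[normalize(value)].add(value)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}

# Hypothetical target dimension values pulled from a raw dataset.
raw = ["Appetizers", "Appetizer", "Entree", "000010054", "10054", "20077"]
issues = inconsistent_values(raw)
for key, variants in issues.items():
    print(key, sorted(variants))
# appetizer ['Appetizer', 'Appetizers']
# 10054 ['000010054', '10054']
```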

Understanding of Column Definitions and Data Logic

Verify the column definitions and data logic with the customer to ensure that there are no hidden nuances within the data.

Remarks

Robust data validation and thorough exploration are foundational to the success of any SensibleAI Forecast implementation. By rigorously applying the validation criteria above, implementors can proceed into Rapid Project Experimentation confident that the underlying data is reliable and that any remaining anomalies reflect real events rather than errors that could skew results. Clear communication with the customer to confirm data definitions, sources of uniqueness, and value consistency is equally vital, since it lays the groundwork for accurate and actionable forecasts. Ultimately, careful data quality practices allow implementors and stakeholders to realize the full predictive potential of SensibleAI Forecast and to make informed, data-driven decisions.