XPF v4.0.0 Release Notes

Author: Connor Stabnick, Created: 2025-06-12

Release Date: 12 Jun 2025

Release Overview

Engine Enhancements

- Support for hosting web applications

- Web Service

- The Web Service is now public instead of private and is protected by OIS authentication.

- Restructured API blueprints and endpoints to support URL-based versioning

- Web apps (see below)

- “Identity Rollover” database refactor

- Done in anticipation of an infrastructure migration; this better prepares the system to work with Azure Hyperscale.

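URL-based versioning means the API version is carried in the request path, so older and newer endpoint shapes can be served side by side. The following framework-agnostic Python sketch illustrates the idea. The `/api/vN/<endpoint>` path layout, the `ROUTES` table, and the `dispatch` function are hypothetical and do not reflect Xperiflow's actual blueprints or routes.

```python
import re
from typing import Callable, Dict, Tuple

# Hypothetical route table keyed by (API version, endpoint name).
Handler = Callable[[], str]
ROUTES: Dict[Tuple[int, str], Handler] = {
    (1, "jobs"): lambda: "jobs (v1 response shape)",
    (2, "jobs"): lambda: "jobs (v2 response shape)",
}

# Matches paths such as "/api/v1/jobs" (the "/api/vN/" prefix is an assumption).
_VERSIONED_PATH = re.compile(r"^/api/v(?P<version>\d+)/(?P<endpoint>[\w-]+)/?$")


def dispatch(path: str) -> str:
    """Resolve a versioned URL path to the handler registered for that version."""
    match = _VERSIONED_PATH.match(path)
    if match is None:
        raise ValueError(f"not a versioned API path: {path!r}")
    key = (int(match.group("version")), match.group("endpoint"))
    if key not in ROUTES:
        raise LookupError(f"no handler for version {key[0]} of {key[1]!r}")
    return ROUTES[key]()


print(dispatch("/api/v1/jobs"))  # jobs (v1 response shape)
print(dispatch("/api/v2/jobs"))  # jobs (v2 response shape)
```

Keeping the version in the path lets existing clients stay pinned to `v1` while `v2` evolves, and an unknown version fails with an explicit error instead of silently falling back.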

AI Studio

- New Routines added to AI Studio

- Account Recs Anomaly Arena (Limited Availability)

- Coordinates the creation, deletion, and running of Account Recs Data Monitor Routine instances, and runs the Data Rule Evaluation Manager. It provides functions to add and remove data rules and to trigger the complete scan and evaluation process across all data monitors. This routine aggregates the artifacts produced by the Account Recs Data Monitor Routines and is the primary integration point between Xperiflow and the AI Plugin for OneStream Financial Close.

- Account Recs Relation Manager (Limited Availability)

- This routine manages the relationships between OneStream Financial Close instances and Anomaly Arenas. Only one instance of this routine should be created in any given environment.

- OneStream Financial Close - Account Recs Data Monitor Routines (Limited Availability)

- Aged Item Commentary

- Integrates with the AI Plugin for OneStream Financial Close to identify reconciliations containing aged items without explanatory commentary. It produces statistics highlighting older unreconciled items that lack adequate justification.

- Aging Changes

- Integrates with the AI Plugin for OneStream Financial Close to detect anomalies in changes to aging items compared with historical data. This helps organizations quickly identify unusual trends in aging reconciliations for timely remediation.

- Check Balance I-Items

- Integrates with the AI Plugin for OneStream Financial Close to identify reconciliations that contain both balance-check (B-Item) and manual-adjustment (I-Item) entries, alerting users to potential inconsistencies that require further validation.

- I-Doc Sparsity

- Integrates with the AI Plugin for OneStream Financial Close to evaluate how regularly supporting I-Doc attachments are added to manual entries (I-Items). It flags periods of insufficient documentation on manual entries in the Account Recs solution.

- Manual Auto Rec Ratio

- Integrates with the AI Plugin for OneStream Financial Close to identify deviations from historical patterns in auto-prepared or auto-approved reconciliations. It supports efficient workflow management by flagging inconsistent use of automated reconciliation.

- Number of Approvers

- Integrates with the AI Plugin for OneStream Financial Close to detect changes in the number of approvers required for reconciliation approvals.

- Num I-Items

- Integrates with the AI Plugin for OneStream Financial Close to track anomalies in the frequency of manual adjustment entries (I-Items). It flags deviations from historical usage patterns, helping pinpoint unusual manual interventions.

- Num Rec Detail Items

- Integrates with the AI Plugin for OneStream Financial Close to identify unexpected fluctuations in the number of detail items on reconciliations, helping keep the reconciliation review process consistent and predictable.

- R-Doc Sparsity

- Integrates with the AI Plugin for OneStream Financial Close to monitor the consistency of R-Doc attachments at the reconciliation level.

- Rec Balance

- Integrates with the AI Plugin for OneStream Financial Close to analyze reconciliation balances against historical trends, flagging abnormal increases or decreases. It identifies significant variances in balances over time and produces statistics about those variances.

- Rec Detail Text Field Length

- Integrates with the AI Plugin for OneStream Financial Close to monitor the text length of reconciliation details and detect decreases in the explanatory information provided.

- Repetitive Rejections

- Integrates with the AI Plugin for OneStream Financial Close to detect recurring reconciliation rejections across multiple periods or cycles.

- Risk Level Fluctuations

- Integrates with the AI Plugin for OneStream Financial Close to detect significant or erratic changes in assigned reconciliation risk levels.

- S-Doc Sparsity

- Integrates with the AI Plugin for OneStream Financial Close to track how consistently S-Docs are attached to reconciliations.

- Sign Difference

- Integrates with the AI Plugin for OneStream Financial Close to identify reconciliation balances whose current sign (positive/negative) deviates from historical patterns.

- T-Doc Sparsity

- Integrates with the AI Plugin for OneStream Financial Close to evaluate how consistently T-Docs (transaction-level documents) are attached to reconciliations period over period.

- Code Scanner

- Powers the OSDAI integration. This routine lets users scan all applicable custom code in their OneStream application against a given set of conditions. It ships with 38 predefined conditions that target frequent causes of performance degradation on the OneStream platform, and it allows custom conditions to be created to flag customer-specific coding practices that customers wish to avoid.

- Cold Start Modeling

- Cold Start Modeling enables predictions for elements/targets with limited historical data. It trains a global model that learns patterns within each time series as well as across time series to inform future predictions. Both static and dynamic features can be passed in, helping the model make better connections between the target time series.

- Data Monitor Manager

- The Data Monitor Manager routine lets users interact with the data monitors they have created. Data monitors are functions that perform “scans” on a dataset to surface helpful facts, statistics, or other information about it. The routine allows for easy maintenance of, and interaction with, these data monitors.

- Data Rule Manager

- A Data Rule runs a query/filtering mechanism against 1-N data monitors, and 1-N scans for each data monitor, to present “flags” to a user. This routine lets users interact with data rules; a user can create and group data rules to be used for evaluation.

- Data Rule Evaluation Manager

- A routine for running and evaluating data rules against data monitors and their scans. It outputs flags based on each rule's definition and the scans/monitors the rule is run against.

- ML Classification

- A classification routine with automated training and prediction workflows. It compares multiple model types using default or user-provided hyperparameters, selects the top performer, tunes its hyperparameters, and saves the optimized model for inference. Supports binary, multi-class, and multi-label classification (up to 5 labels).

- ML Regression

- A regression routine with automated training and prediction workflows. It compares multiple model types using default or user-provided hyperparameters, selects the top performer, tunes its hyperparameters, and saves the optimized model for inference. Supports up to 5 individual target variables.

- Natural Language to SQL

- Translates natural language into SQL queries, supporting the SQL Server and DuckDB dialects. It is used by the latest release of DMA.

- Natural Language Tables Query

- This routine allows users to pose natural language questions to various tabular data sources and receive intelligible, context-rich responses that may include numerical insights or tabular data.

- Operational Data Chat (Private Preview)

- Allows users to convert natural language requests about operational data into SQL queries that are executed against a given database, with the results returned in a context-based conversation. This enables LLM-driven insights and data analyses optimized for accuracy against the user’s data.

- Training - Bank Account Mgmt

- A simple Stateful Routine to demonstrate how Routines work through a bank account management use case. Intended to be used as part of a training series.

- Target Filtering

- Allows users to filter prediction data down to one model per target based on user-provided filters and flags. (Created by Target Flagging Filtering in FOR projects.)

- Target Flagging Data Monitor

- Allows users to create a ‘scan’ on which data rules can be evaluated. (Created by Target Flagging Filtering in FOR projects.)

- Data Rule Flagging

- Allows users to evaluate data rules against a given scan to determine whether the data rules were met. (Created by Target Flagging Filtering in FOR projects.)

- Target Flagging Filtering

- Allows users to handle all Monitor Section functionality within a single routine; see Monitor Section (Utilization) below for more information. Created as part of the target data load in FOR projects.

- Target Sub-Sampler

- This new routine enables users to intelligently downsample their dataset by identifying the most representative time series targets using Dynamic Time Warping (DTW), Significance Breakdown Sampling, or Semi-Random Sampling.

- Text Embeddings

- Embeds strings into vector format for use by other services. It also provides functionality to perform cosine similarity analysis between a vectorized string array and an input value.

- Misc enhancements to existing Routines

- K-means Clustering

- Updated the routine to include the columns ignored by the clustering model in the final data artifacts for both fit and predict. This was requested by the consulting team; for example, an ID column can now be carried through to map results back to the original data.

- Component Workflow rewritten as a web app

- Updates can now be pushed much more quickly, and a filter editor component is now supported directly in the workflow.
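The Data Rule Manager and Data Rule Evaluation Manager routines described above apply rules to the scans produced by data monitors and emit flags. The following Python sketch models that relationship under stated assumptions: the `Scan`, `DataRule`, and `Flag` classes, the `evaluate` function, the monitor names, and the `pct_change` statistic are all hypothetical and are not the routines' actual API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Scan:
    """One scan produced by a data monitor (hypothetical shape)."""
    monitor: str
    scan_id: str
    stats: Dict[str, float]


@dataclass
class DataRule:
    """A filter applied to the scans of 1-N monitors; matching scans become flags."""
    name: str
    monitors: List[str]
    condition: Callable[[Dict[str, float]], bool]


@dataclass
class Flag:
    rule: str
    monitor: str
    scan_id: str


def evaluate(rules: List[DataRule], scans: List[Scan]) -> List[Flag]:
    """Run every rule against every scan belonging to the monitors it targets."""
    flags: List[Flag] = []
    for rule in rules:
        for scan in scans:
            if scan.monitor in rule.monitors and rule.condition(scan.stats):
                flags.append(Flag(rule.name, scan.monitor, scan.scan_id))
    return flags


# Illustrative scans from two hypothetical monitors.
scans = [
    Scan("rec_balance", "2025-05", {"pct_change": 0.04}),
    Scan("rec_balance", "2025-06", {"pct_change": 0.35}),
    Scan("num_i_items", "2025-06", {"pct_change": 0.10}),
]
rules = [
    DataRule("large_balance_swing", ["rec_balance"],
             lambda stats: abs(stats["pct_change"]) > 0.25),
]

for flag in evaluate(rules, scans):
    print(flag)  # Flag(rule='large_balance_swing', monitor='rec_balance', scan_id='2025-06')
```

Keeping rules separate from monitors is what allows one rule to be evaluated across several monitors and many scans, and allows rules to be grouped without re-running the scans themselves.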

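The Text Embeddings routine's cosine analysis compares an input value's vector with each vector in an embedded string array. Assuming this is standard cosine similarity (the routine's exact scoring is not described in these notes), the computation looks like the following Python sketch. The toy three-dimensional vectors stand in for real embeddings, and `similarity_scores` is a hypothetical helper.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def similarity_scores(corpus: List[Sequence[float]], query: Sequence[float]) -> List[float]:
    """Score each embedded string in the corpus against the embedded input value."""
    return [cosine_similarity(vec, query) for vec in corpus]


# Toy vectors standing in for real embeddings.
corpus = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]]
query = [1.0, 0.0, 0.0]
print(similarity_scores(corpus, query))
```

Scores range from -1 to 1; values near 1 indicate strings whose embeddings point in nearly the same direction as the input.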

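Dynamic Time Warping is one of the three sampling strategies listed for the Target Sub-Sampler. The following Python sketch computes the classic DTW distance and then, as an assumption, treats the targets with the lowest total DTW distance to all other targets (the medoids) as the most representative. How the routine actually scores representativeness is not described here, the Significance Breakdown and Semi-Random strategies are not shown, and `most_representative` is a hypothetical helper.

```python
from typing import Dict, List, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic dynamic time warping distance using absolute-difference cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] is the minimal cumulative cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # a[i-1] also aligns with an earlier b point
                cost[i][j - 1],      # b[j-1] also aligns with an earlier a point
                cost[i - 1][j - 1],  # one-to-one alignment
            )
    return cost[n][m]


def most_representative(series: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Return the k targets with the smallest total DTW distance to all other targets."""
    totals = {
        name: sum(dtw_distance(values, other)
                  for other_name, other in series.items() if other_name != name)
        for name, values in series.items()
    }
    return sorted(totals, key=lambda name: totals[name])[:k]


# Illustrative targets: B is A with a one-step delay; C is an outlier.
series = {
    "A": [1, 2, 3, 4],
    "B": [1, 1, 2, 3, 4],
    "C": [10, 10, 10, 10],
}
print(most_representative(series, 2))  # ['A', 'B']
```

Because DTW lets one point align with several points of the other series, A and B are at distance 0 despite the delay, which is why DTW suits comparing time series that share a shape but not exact timing. Each distance costs O(n·m), and the pairwise totals grow quadratically with the number of targets.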

AI Forecast

- Hierarchical forecasting

- Users can now specify up to 7 hierarchies using any subset of the intersection dimensions (including no intersection dimensions, for a “Top Level” target), along with a reconciliation method that ensures predictions at the different hierarchy levels add up correctly. Doing so creates an additional target for each distinct combination of intersection dimension values at each hierarchy level.

- For example, suppose the target dataset has the intersection dimensions “Region” (values “East” and “West”) and “Store” (values 1, 2, 3), and the user specifies “Region” as an additional hierarchy. Two additional targets are created: “[Region]East” and “[Region]West”. These targets go through modeling and prediction like any other target, and every target gets an additional “ReconciledModel” which ensures that, for the prediction value P at day x, P(ReconciledModel(“[Region]East”)) = P(ReconciledModel(“[Region]East~[Store]1”)) + P(ReconciledModel(“[Region]East~[Store]2”)) + P(ReconciledModel(“[Region]East~[Store]3”)).

- Each hierarchy has a name and a set of intersection dimensions.

- Source Feature Datasets must now specify which hierarchies they apply to (if none are specified, they are applied to all hierarchies).

- The grouping configuration has a new option, “isolate to individual hierarchies”, that specifies whether a group can contain targets from different hierarchies.

- GPT Explanations

- The Prediction Summary web app has a button in the bottom-right corner that generates a GPT explanation of the current page, helping users interpret the data or graph they are viewing.

- Activity Logs

- Four new web apps provide better insight into jobs and tasks.

- Job Progress Bar

- A small colored bar in the top right of all FOR pages. Updates in real time.

- Job Progress Tracker

- Launches a dialog for the project's most recent job, with insights into the full sub-task tree and error log. Updates in real time.

- Pipeline Run Tracker

- Shows the active status of the pipeline along with high-level statistics and the progress of the main tasks. Updates in real time.

- AI Services Log

- Revamped to include a Gantt chart of the entire job and task tree over time. Filter by time, user, project, solution, and more.

- Prediction Summary Webapp

- A large web app containing the model arena, viewable after a pipeline run or in utilization with forecasts. Its pages provide insights into forecasts, prediction explanations, feature impact, and more for any model of a given target, with different error metrics and frequencies (d, m, q, y).

- Feature Generalization Webapp

- View utilization percentages of features across targets. Filter by hierarchy or target.

- Data Dataset Aggregate Webapp

- Analyze source data: filter by specific dimensions, resample to different frequencies, and view missing-data percentages.

- Monitor Section (Utilization)

- Users can now filter down prediction data to select one model per target per forecast.

- Users create Flags and Filters that determine how the preferred model is selected, based on thresholds for model performance metrics and source data metrics.

- Each Filter has a flag tied to it, representing the first step when filtering down to a specific model.

- After the flag has been evaluated, the user can create additional fallbacks to further filter the models.

- If no models satisfy the desired constraints after the fallbacks are evaluated, the user can also select an ultimate fallback model, which is used when the filtering steps find no models.

- Misc bug fixes

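The “[Region]East” example under Hierarchical forecasting can be reproduced with bottom-up summation. This is only one possible reconciliation method: users choose the method, and other methods adjust predictions at every level rather than simply summing the base level. In the following Python sketch the prediction values are made up, `parse_target` and `bottom_up` are hypothetical helpers, and the `TopLevel` key for a hierarchy with no intersection dimensions is an illustrative name.

```python
from collections import defaultdict
from typing import Dict, List


def parse_target(name: str) -> Dict[str, str]:
    """Split a target name like "[Region]East~[Store]1" into {"Region": "East", "Store": "1"}."""
    dims: Dict[str, str] = {}
    for part in name.split("~"):
        dim, value = part[1:].split("]", 1)
        dims[dim] = value
    return dims


def bottom_up(base: Dict[str, float], hierarchy: List[str]) -> Dict[str, float]:
    """Sum base-level predictions into the targets of one hierarchy level."""
    totals: Dict[str, float] = defaultdict(float)
    for name, prediction in base.items():
        dims = parse_target(name)
        key = "~".join(f"[{d}]{dims[d]}" for d in hierarchy) or "TopLevel"
        totals[key] += prediction
    return dict(totals)


# Made-up base-level predictions P for a single day x.
base = {
    "[Region]East~[Store]1": 120.0,
    "[Region]East~[Store]2": 80.0,
    "[Region]East~[Store]3": 50.0,
    "[Region]West~[Store]1": 90.0,
    "[Region]West~[Store]2": 110.0,
}
print(bottom_up(base, ["Region"]))  # {'[Region]East': 250.0, '[Region]West': 200.0}
print(bottom_up(base, []))          # {'TopLevel': 450.0}
```

Under bottom-up reconciliation the base-level reconciled predictions equal their original predictions, and every higher level is their sum, so the equality in the example holds by construction.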

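The Monitor Section's selection order (flag first, then user-created fallbacks, then an ultimate fallback model) can be sketched as follows. This Python sketch rests on several assumptions: that fallbacks are tried in order only when the previous step leaves no models, that ties are broken by the lowest error metric, and that `Candidate`, `mape`, `select_model`, and the model names are hypothetical rather than the product's actual configuration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    model: str
    mape: float  # hypothetical model-performance metric for one target


Rule = Callable[[Candidate], bool]


def select_model(candidates: List[Candidate], flag: Rule,
                 fallbacks: List[Rule], ultimate_fallback: str) -> str:
    """Pick one model for a target: the flag, then each fallback in order, then the ultimate fallback."""
    for rule in [flag, *fallbacks]:
        passing = [c for c in candidates if rule(c)]
        if passing:
            # Assumption: among passing models, prefer the lowest error.
            return min(passing, key=lambda c: c.mape).model
    return ultimate_fallback


def strict(c: Candidate) -> bool:
    return c.mape < 0.10


def relaxed(c: Candidate) -> bool:
    return c.mape < 0.20


candidates = [Candidate("ModelA", 0.18), Candidate("ModelB", 0.12), Candidate("ModelC", 0.30)]
print(select_model(candidates, strict, [relaxed], "ModelC"))  # ModelB
print(select_model(candidates, strict, [], "ModelC"))         # ModelC
```

Source-data thresholds (for example, a minimum amount of history for the target) could be added as further conditions inside the same rules.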

XAT

- Bulk Identity Inserts

- OneStream PAT storage mechanism for the system user

- User-to-Group relation view on the User home page

Dependencies

Requires OneStream 9.x to be installed prior to use.

Known Issues

Web App Login Refresh

- If users remain in an environment for longer than one hour, they are prompted to log in to view any web app every time they navigate to a new page. The Platform team has a fix for this in a PR, and a temporary workaround is available in the meantime. If you are upgrading to v4.0.0, please contact Evan for details on putting this workaround in place.

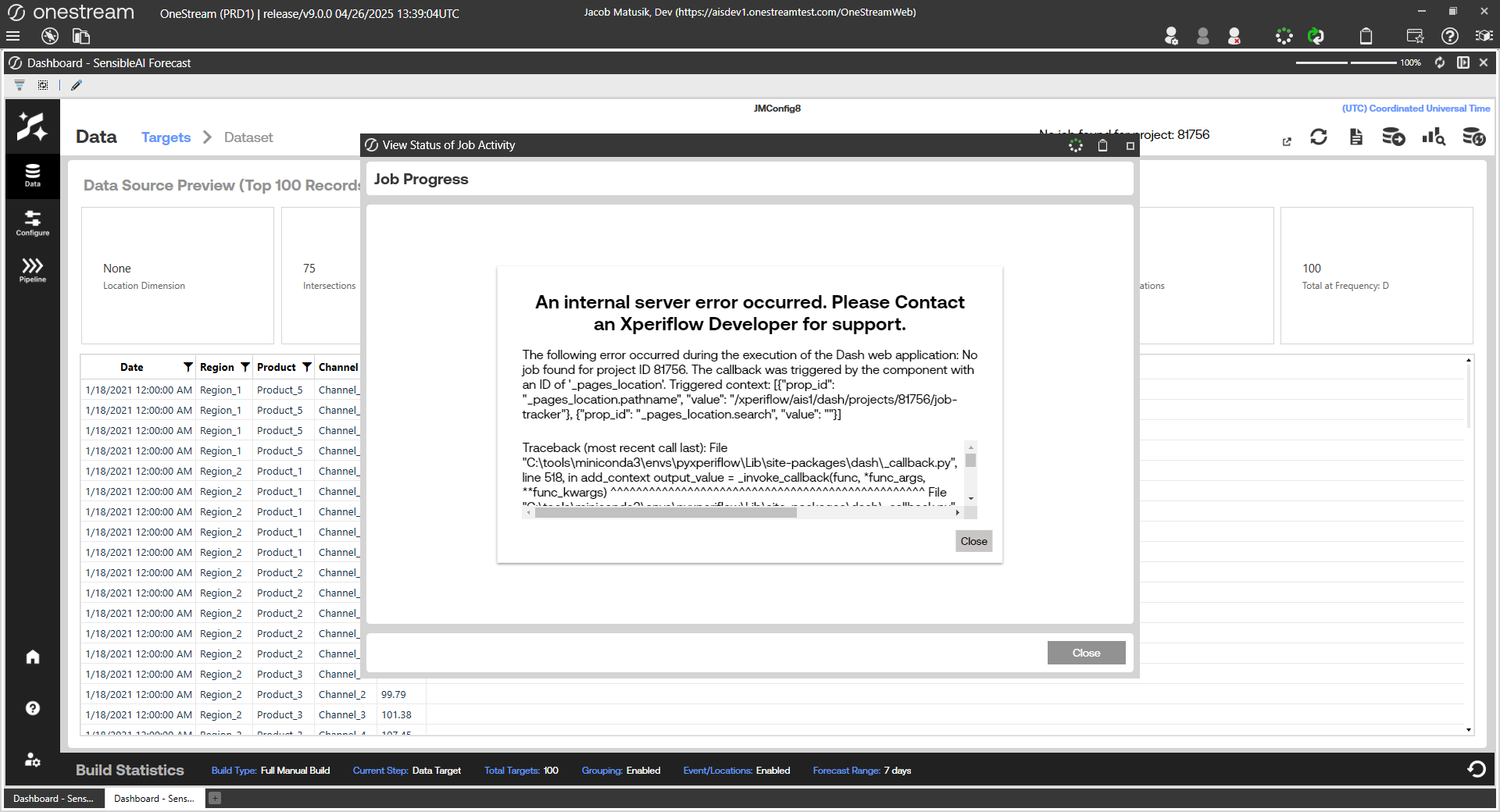

Job Tracker Web App on Project Copies

- When the Job Tracker web app is viewed in a project copy, an error is raised if no jobs have been run for that project yet. This is expected behavior, but the error message is not user-friendly. This is fixed in 4.0.1.